ASU researchers improving human life with AI earn NSF CAREER Awards

Two faculty members in Arizona State University’s Ira A. Fulton Schools of Engineering have earned the highly competitive and prestigious Faculty Early Career Development (CAREER) Award from the National Science Foundation.

Heni Ben Amor and Yezhou Yang, both assistant professors of computer science and engineering studying robotics in the School of Computing, Informatics, and Decision Systems Engineering, represent two of ASU’s three winners. These researchers continue the long history of junior faculty receiving this honor in the Fulton Schools. Over the last five years, 30 Fulton Schools faculty have earned NSF CAREER Awards.

“I’m proud we’re continuing to attract faculty whose powerful ideas lead to discoveries of foundational value to their fields with potentially transformational breakthrough applications,” said Kyle Squires, dean of the Fulton Schools. “These awards enable our junior faculty to impact an array of critical problem sets in engineering and science and improve our nation’s future.”

The NSF CAREER Awards support the nation’s most promising junior faculty members as they pursue outstanding research, excellence in teaching and the integration of education and research. Together, Ben Amor and Yang secured more than $1 million in funding for the next five years to make transformative advances in artificial intelligence through machine learning and human-technology collaborative systems.

“These researchers are enhancing our school’s reputation by earning highly respected honors,” said Sandeep Gupta, director of the School of Computing, Informatics, and Decision Systems Engineering, one of the six Fulton Schools. “Ben Amor and Yang have far-reaching potential to lead advances in our school’s mission and benefit society through excellence in education, research and service to the profession and community.”

Given the many fears society has regarding robots of the future, Ben Amor and Yang seek to discover fundamental technologies that will complement humans rather than replace them. Interactive robotics has the potential to assist humans and dramatically improve their quality of life.

Preventative robotics steers humans away from injury

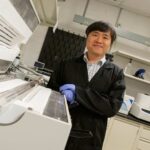

Ben Amor conducts research at the intersection of robotics and human-machine interaction. He investigates how humans and machines can work together to accomplish important tasks in service, health care and other industries.

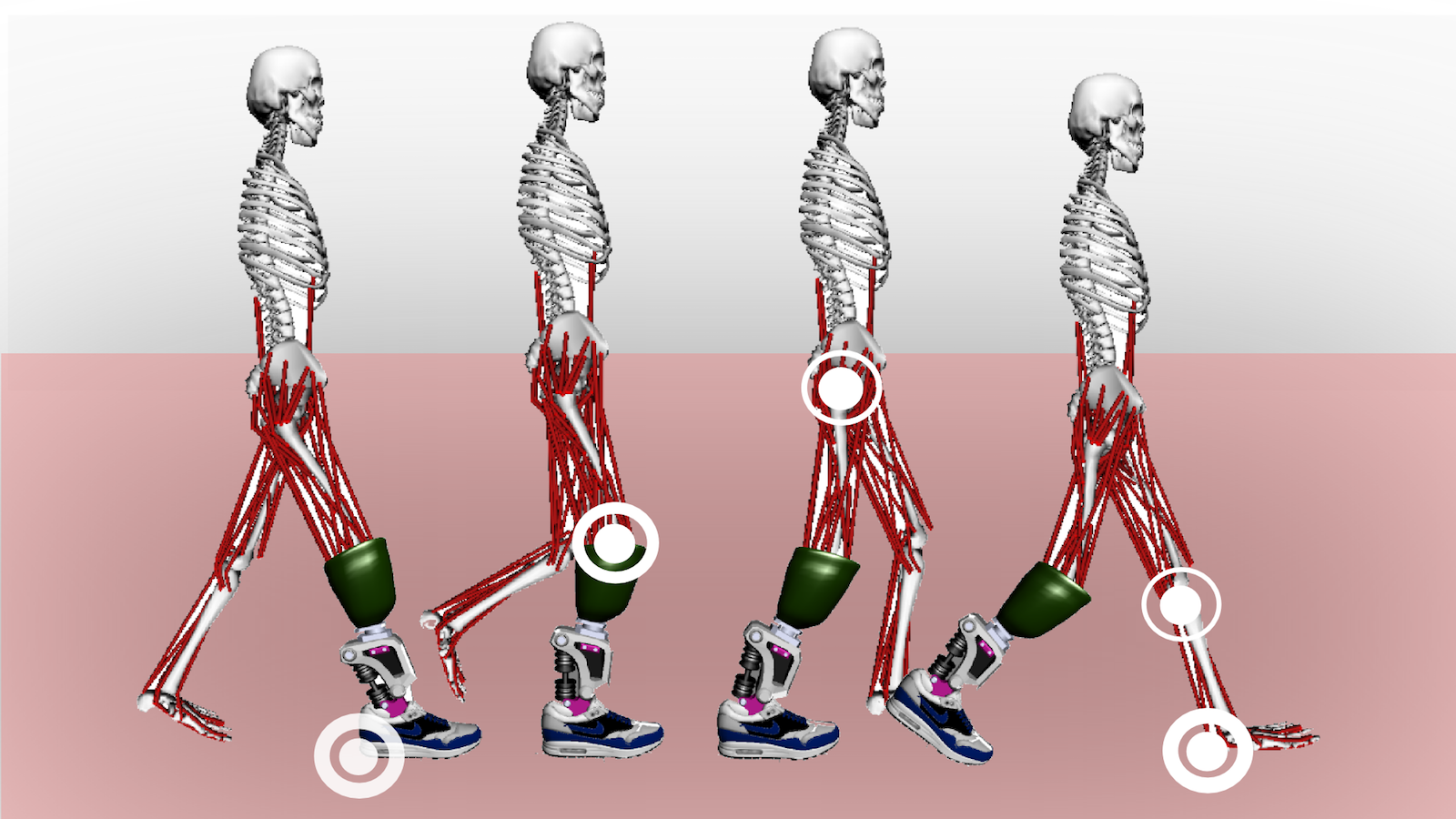

The graphic shows the biomechanics of a walking gait when a person uses a lower-leg prosthesis. The white circles indicate points of stress on the human body. Graphic courtesy of Heni Ben Amor

The five-year, $499,625 NSF CAREER Award project will focus on establishing the concept of preventative robotics to intelligently minimize the risk of physical injury. In contrast to rehabilitation robotics, which focuses on therapeutic procedures after an injury, preventative robotics is a novel approach that incorporates human well-being into robot control and decision-making to steer away from injury.

Ben Amor will seek to generate and deploy assistive robotic technologies, such as a prosthesis or an exoskeleton, that seamlessly blend with actions of a human partner to achieve an intended function while minimizing biomechanical stress on the body. Combining these goals will unlock new potential for robotics to improve public and occupational health.

“The unique element is we’re developing machine-learning methods, called interaction primitives, that allow machines to predict the bodily ramifications of an action. They allow the machine to reason about how an action will affect the human partner,” said Ben Amor. “It becomes a partnership, or a symbiosis, between the human and machine rather than two different agents each having their own goal.”

Traditional prosthetic devices don’t adapt to the human user. Over time, people change their walking gait to acclimate to their prosthesis, which shifts more stress onto the other leg. As a result, prosthesis wearers may lose mobility completely because the other leg sustains further injury.

This new approach of preventative robotics will be implemented on a powered-ankle prosthesis to predict internal stresses, anticipate joint loads and proactively avoid damage. The resulting prosthesis will have the potential to significantly lower the risk of musculoskeletal diseases, such as osteoarthritis.

“These devices could help many millions of people who have developed or are about to develop musculoskeletal diseases as well as people who have lower-leg amputations,” said Ben Amor. “This can help improve their quality of life and at the same time substantially reduce health care costs.”

Ben Amor’s research team will collaborate with the Mayo Clinic and SpringActive, a company developing modern prostheses that restore biological gait to people with lower limb amputations, to develop the assistive ankle device and bring it to fruition for a measurable impact on the community.

“I must admit I was completely surprised when I found out about the CAREER Award,” said Ben Amor. “The urban legend is you won’t get it on the first try. I think given the level of competition involved in these proposals, it’s really important for you to go the extra mile and maybe even the extra 10 miles to win, and that’s exactly what the Fulton Schools and my colleagues at CIDSE helped me achieve.”

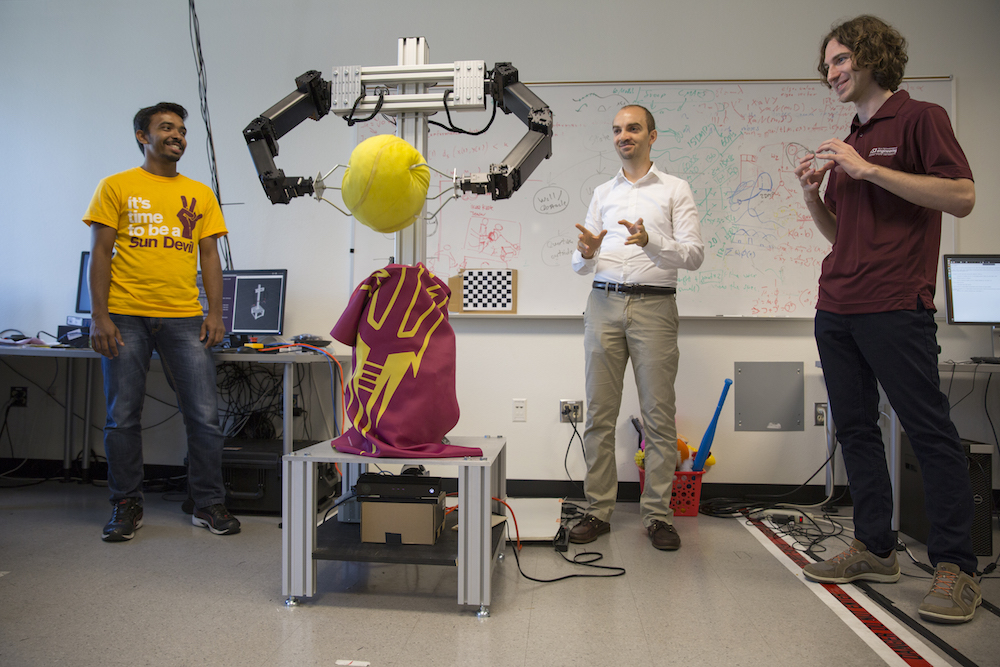

Assistant Professor Heni Ben Amor (middle), computer science doctoral student Kevin Sebastian Luck (right) and computer science master’s student Yash Rathore (left) watch as their robot tosses a ball. Ben Amor’s algorithm reduces learning time so a robot can complete the task in a matter of hours. Photographer: Jessica Hochreiter/ASU

Visual recognition with knowledge helps robots learn and adapt to human needs

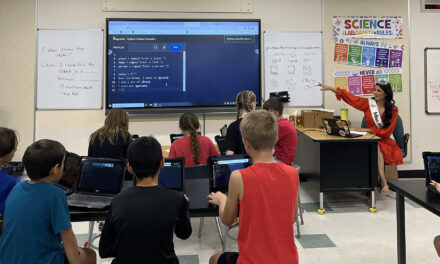

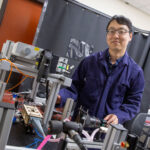

Yang’s research focuses on creating intelligent robots that can understand humans through the lens of visual perception. He studies active perception, an area of computer vision with a focus on computational modeling, decision and control strategies for robotic perception. He combines this with natural language processing and artificial intelligence reasoning to advance robotic visual learning. This improves the capabilities of a robot or any intelligent agent to make sense of a specific environment.

The five-year, $550,000 NSF CAREER Award project will address the challenging task of pairing visual recognition with knowledge. This research will attempt to enable a seeing machine to identify previously unknown visual concepts by drawing on previous encounters and other contextual information.

Yang said current machine learning technology has achieved nearly perfect performance. Google, DeepMind and other companies have developed systems that surpass human capacity to recognize objects on ImageNet, a large database of categorized images used in visual recognition software research.

“The problem with the current approaches is that they typically need a pre-defined set of visual categories,” said Yang. “We want to build a system that’s able to expand its repository of visual categories from the pre-defined set.”

For instance, consider a machine that has never encountered a zebra but has recognized horses and patterns with black and white stripes. Integrating visual and linguistic information, such as the statement that “a zebra is a horse-like animal with a black-and-white striped appearance,” will enable a machine to formulate a new “recognizer” for the visual concept “zebra” and recognize this new concept later.
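As a rough illustration of the zebra example, and not a description of Yang’s actual method, a recognizer for a new concept could be composed from recognizers the machine already has. In the sketch below, the attribute names, the precomputed scores and the choice to multiply scores together are all hypothetical assumptions made for illustration.

```python
from typing import Callable, Dict, List

# In this sketch, an image is represented by precomputed attribute scores in [0, 1].
Features = Dict[str, float]
Recognizer = Callable[[Features], float]


def make_attribute_recognizer(attribute: str) -> Recognizer:
    """Hypothetical stand-in for a trained recognizer: looks up a precomputed score."""
    return lambda features: features.get(attribute, 0.0)


def compose_recognizer(parts: List[Recognizer]) -> Recognizer:
    """Build a recognizer for a new concept as the product of existing recognizer scores."""
    def recognizer(features: Features) -> float:
        score = 1.0
        for part in parts:
            score *= part(features)
        return score
    return recognizer


# Recognizers the machine is assumed to already have.
known = {
    "horse_like": make_attribute_recognizer("horse_like"),
    "black_white_stripes": make_attribute_recognizer("black_white_stripes"),
}

# Linguistic knowledge: "a zebra is a horse-like animal with black-and-white stripes".
zebra_definition = ["horse_like", "black_white_stripes"]
zebra = compose_recognizer([known[name] for name in zebra_definition])

# Made-up scores for two images the machine might see.
zebra_photo = {"horse_like": 0.9, "black_white_stripes": 0.8}
horse_photo = {"horse_like": 0.95, "black_white_stripes": 0.05}

print(zebra(zebra_photo) > zebra(horse_photo))
```

The composed recognizer scores the zebra image higher than the horse image even though no zebra examples were used to build it. A real system would need learned visual features and a way to extract the definition from text, which this sketch leaves out.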

“We’re trying to see if we can extend the machine’s capability to create new recognizers for new categories,” said Yang. “We need to extract knowledge in a machine-readable form, using natural language processing, knowledge retrieval and reasoning capabilities — all of which are inspired by human behavior and thinking. We also want to test this capability on a physical active agent to detect whether our system can generalize its visual recognition capabilities beyond the purest model.”

Yang’s project will lay the foundation for the development of robust personal mobile applications and service robots, such as visual assistants for people with impaired vision or voice-enabled agents for elder care.

“Ideally, we want to create more adaptive active agents with perception capabilities,” said Yang. “These agents will adapt to the user’s behavior, preference and specific requirements to better serve their needs.”

Yang said he’s excited about the opportunity to start this new avenue of research with support from the NSF CAREER Award. This is a project he’s dreamed of conducting at ASU, especially in the Fulton Schools.

“I really appreciate the environment here at the university within the Fulton Schools,” said Yang. “They grant the junior faculty enough support, great professional mentoring, freedom and time to discover and explore their ideas while promoting interdisciplinary collaborations among faculty members, which is very unique. That’s why I enjoy doing research at ASU.”

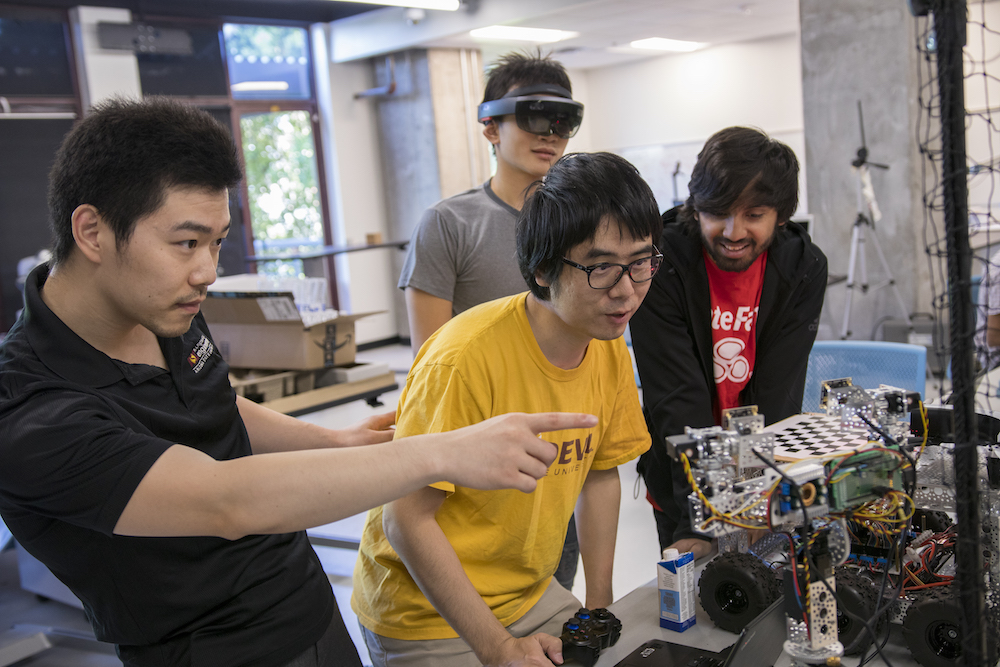

Assistant Professor Yezhou Yang (left), computer science doctoral student Duo Lu (middle) and computer engineering graduate student Anshul Rai (right) calibrate their head-neck cyber-physical platform for developing the active perceiving system while computer engineering graduate student Shibin Zheng (back) wears HoloLens to test an augmented reality-based, human-drone system. Photographer: Marco-Alexis Chaira/ASU