ASU researchers add human ingenuity to automated security tool

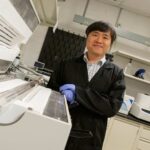

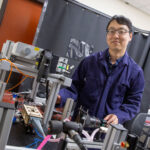

Above: Arizona State University Assistant Professors of computer science Yan Shoshitaishvili (left) and Ruoyu “Fish” Wang (on screen) in the Laboratory of Security Engineering for Future Computing, or SEFCOM. Shoshitaishvili, Wang and a multi-university team earned an $11.7 million award from the Defense Advanced Research Projects Agency's Computers and Humans Exploring Software Security, or CHESS, program to develop a human-computer collaborative approach to cybersecurity, which they call Cognitive Human Enhancements for Cyber Reasoning Systems, or CHECRS. Photographer: Erika Gronek/ASU

The world’s top chess player isn’t a human or a computer; it’s a “centaur,” a hybrid chess-playing team composed of a human and a computer.

The Defense Advanced Research Projects Agency is looking to apply the same human-computer collaborative approach to cybersecurity through its Computers and Humans Exploring Software Security, or CHESS, program.

A team of researchers in the Ira A. Fulton Schools of Engineering at Arizona State University is working with collaborators at the University of California, Santa Barbara, the University of Iowa, North Carolina State University and EURECOM to make their move in this space. The team’s project is called CHECRS, or Cognitive Human Enhancements for Cyber Reasoning Systems.

The $11.7 million award supports the multi-university CHECRS team’s efforts to create a human-assisted autonomous tool for finding and analyzing software vulnerabilities that also learns from and incorporates human strengths of intuition and ingenuity. The ASU team, led by Ruoyu “Fish” Wang, an assistant professor of computer science and engineering in the Fulton Schools, received $6.6 million of the award funding.

Wang credits the School of Computing, Informatics, and Decision Systems Engineering (CIDSE), one of the six Fulton Schools, and its strong focus on cybersecurity research, academic concentrations, publications and faculty hires as driving forces behind the work that won the DARPA award.

“This DARPA award, together with the Harnessing Autonomy for Countering Cyberadversary Systems (HACCS) award we received last year, supports CIDSE’s development strategy,” Wang says. “They will also help make the Laboratory of Security Engineering for Future Computing (SEFCOM) one of the top security research labs in the country.”

Wang also credits ASU’s Global Security Initiative (GSI), which provided substantial help in securing the DARPA award.

“Seriously, we wouldn’t have gotten this award without GSI’s assistance,” Wang says. “Their extensive knowledge and experience in working with government agencies are crucial to securing awards like this.”

Security and data breaches caused by software vulnerabilities, which are a natural byproduct of writing code, are now reported nearly every week. The success of this DARPA research program depends on the teams’ ability to build systems that find and mitigate these costly mistakes before they can be exploited for nefarious purposes.

As in a game of checkers, defenseless pieces are inevitable, but the CHECRS team is developing a way for humans and computers to work together to identify vulnerabilities before black-hat hackers or other bad actors have the opportunity to make a play.

“It’s a lot of responsibility,” says Yan Shoshitaishvili, an assistant professor of computer science and engineering and co-principal investigator on the project. “It’s a big undertaking that the government is making, and we have a lot of responsibility to make it a success. I have no doubt we’ll be successful.”

Autonomous tools can do the job, but they’re novice players

During the 2016 DARPA Cyber Grand Challenge in Las Vegas, a packed crowd watched seven computers on a stage just sitting there, blinking. It was an exciting day.

The computers were autonomously fighting a cyber war against each other. They were executing the results of years of research by teams of computer scientists who had created autonomous cyber reasoning systems that could analyze software, find vulnerabilities, generate proofs of vulnerability and patch the flaws automatically, all without human interaction.

Wang and Shoshitaishvili, then both graduate students at the University of California, Santa Barbara, were on one of the seven finalist teams, Shellphish. Captained by Shoshitaishvili, Shellphish earned third place in the competition, but the team also left with the seed of an idea.

“Since then we’ve been thinking about the concept of human-assisted cyber autonomous systems,” Shoshitaishvili says. “We had the realization that if you have both an autonomous system and a principled way to reinject human intuition, which these machines lack, you can create something better than the sum of its parts.”

Human qualities make for the best of both worlds

It’s important for a cyber reasoning system to be able to function autonomously, because that demonstrates machines can do all of the work if necessary. Computing power is also cheap, scales easily and can work around the clock. However, Shoshitaishvili likens these autonomous tools to 1990s chess-playing computers: able to win sometimes, but not with the consistency and skill of a human champion like Garry Kasparov.

Software, however, is far more complex than chess, a game with a well-defined set of rules that machines can master efficiently.

“While modern automated tools run on computers that calculate billions of times faster than a human brain, human security analysts still find the majority of software vulnerabilities,” Wang says. “This is because the knowledge and intuition that humans possess outweigh the speed of calculation when facing problems with extreme complexity, for example, finding software vulnerabilities.”

Since humans are available to help with security analysis, it makes sense for people and machines to work together.

Automated tools already exist to help expert security researchers and white-hat hackers (those working for good) detect vulnerabilities. But these tools are only useful to an elite few.

The CHECRS team wants to create an autonomous tool that a wider variety of human assistants can use. Software developers, quality assurance specialists and others without security expertise have human intuition and ingenuity that can meaningfully aid the automated tool.

When humans of varying skill and expertise are available, or when the machine needs intuition to connect the dots, the automated tool can delegate the tasks it handles poorly to its human partners while it switches to tasks that computers are optimized to perform.

Not only do humans help in the moment, but the automated tool will also incorporate what it learns from human contributions to continuously improve itself, both in how it interacts with its human partners and in its own ability to accomplish tasks.

In achieving this ability to work together and learn from one another, the CHECRS team will meet the first two of the five DARPA CHESS program goals: bringing human assistants into the autonomous cyber reasoning system (with efforts led by Shoshitaishvili) and getting machines to understand software the way humans do (led by Wang).

“As we observe instances of humans helping the machine, can we learn from that using machine learning or by observing and trying to recreate [human capabilities] algorithmically and reproduce it in the machine itself?” Shoshitaishvili asks. “Our expectation is yes.”

Working toward a more secure future

In later stages of the DARPA CHESS program, other research teams will evaluate whether the human-computer teams are working effectively by competing against their systems to detect vulnerabilities.

Professional security analysts will form teams, called control teams, for these week-long competitions. At this point, it remains to be seen what types of vulnerabilities the human-computer teams will be good at finding and fixing. However, CHECRS won’t feel lonely: Some technologies underlying CHECRS, such as the binary analysis platform called angr, could also be employed by control teams.

If the team succeeds in automatically detecting vulnerabilities, alerting people to them in useful ways and even fixing them automatically, cybersecurity breaches and politically motivated hacks could become far rarer than they are today.

“Understanding programs and finding vulnerabilities has always been an art that is only mastered by a small group of elites. But no one wants security to be an art,” Wang says. “In the CHESS program, the CHECRS team regards vulnerability discovery as a scientific problem — which it should have been — and is a steady step toward making software and our world much more secure.”

Shoshitaishvili adds, “We could look at a world where entire classes of vulnerabilities are wiped out because all of the software was analyzed by automated systems,” noting that such a world would require advances beyond the scope of the DARPA CHESS program.

A key requirement for realizing such a future is a tool that scales, one able to analyze far more varieties and amounts of software than the program itself will cover.

“If the system could use human assistance as needed to always be functioning, always be pushing toward a goal and never get stuck due to its limitations,” Shoshitaishvili says, “it’s hard to overstate how useful that will be.”

This research was developed with funding from the Defense Advanced Research Projects Agency (DARPA). The views, opinions and/or findings expressed are those of the authors and should not be interpreted as representing the official views or policies of the Department of Defense or the U.S. Government.

U.S. and European researchers collaborate on CHECRS project

The Arizona State University CHECRS team includes six top researchers in cybersecurity-related fields from the School of Computing, Informatics, and Decision Systems Engineering, one of the six Ira A. Fulton Schools of Engineering.

Yan Shoshitaishvili, an assistant professor of computer science, will focus on integrating human assistance into an autonomous cyber reasoning system in a controlled, principled way.

Ruoyu “Fish” Wang, an assistant professor of computer science, will focus on improving the performance of state-of-the-art program analysis techniques and equipping them with autonomous but human-like capabilities of solving security problems.

Tiffany Bao, an assistant professor of computer science and engineering, is an expert in applying game theory to cyber reasoning systems and in how those systems plan their actions. Her work won the National Security Agency’s cybersecurity paper competition last year.

Chitta Baral, a professor of computer science and engineering, is helping bridge Shoshitaishvili’s and Wang’s work through machine learning research on how to represent the knowledge passed back and forth between human and machine.

Adam Doupé, an assistant professor of computer science and engineering, is helping extend the tool’s abilities beyond web browser security to web pages and mobile applications, broadening its scope. Doupé is also associate director of the Center for Cybersecurity and Digital Forensics.

Stephanie Forrest, a professor of computer science and engineering who is also a professor in the ASU Biodesign Institute, conducts research that explores biological features of software. She will help the CHECRS team give software the ability to mutate like a living organism and develop an immunity to vulnerabilities, which should help automatically find and fix vulnerabilities before software is released to the public.

For the first part of the DARPA CHESS program project, Shoshitaishvili is working with North Carolina State University and EURECOM, a graduate research institute in France.

Alexandros Kapravelos, an assistant professor of computer science at NCSU, brings expertise in web and browser security. This will help the team achieve its goal of analyzing real-world software, in particular extremely complex web browsers like Google Chrome.

At EURECOM, Yanick Fratantonio, an assistant professor of digital security, and Davide Balzarotti, a professor of digital security, will provide insight into how expert versus non-expert humans approach software and interact with software interfaces.

Wang is collaborating with the University of California, Santa Barbara and the University of Iowa on part two of the DARPA CHESS program project.

Antonio Bianchi, an assistant professor of computer science at the University of Iowa, will assist Wang through his expertise in mobile security vulnerabilities and analysis. This will allow the automated tool to tackle issues in complex software, such as fingerprint sensor applications and voice assistants.

Wang will collaborate with program analysis researchers at his alma mater, the University of California, Santa Barbara, where Shoshitaishvili and Doupé also conducted their graduate research and where the Shellphish team emerged to earn third place at the DARPA Cyber Grand Challenge.

Christopher Kruegel and Giovanni Vigna, both professors of computer science at the University of California, Santa Barbara, have been leading contributors to cybersecurity research over the past decade and bring valuable program analysis experience and expertise to complement the ASU team.