Scientists work together to move robotics forward

Posted: September 22, 2010

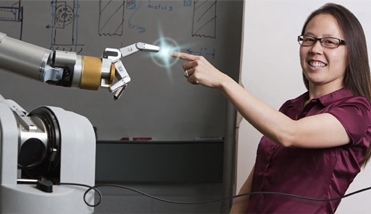

Veronica Santos, an assistant professor of mechanical engineering, demonstrates the capabilities of a robotic hand being developed in her Biomechatronics Lab.

Editor’s note: This article by contributing writer Christopher Vaughan is the cover story of the recent edition of ASU Magazine. It features a group of the university’s engineers and scientists in the Ira A. Fulton Schools of Engineering, the School of Life Sciences, the School of Earth and Space Exploration and the College of Technology and Innovation who are working on the leading edge of advanced robotics.

Our society has grown accustomed to robots in its midst: for decades now, they have been used to assemble cars, check the welds on underground pipes, investigate and defuse bombs, and perform many other tasks that are difficult or dangerous for humans to do.

Still, robots are not anything like the super-intelligent humanoids that science fiction writers of the 1950s thought we would have by now.

For the most part, today’s robots are capable of performing only very limited jobs, working under the close supervision of a human being.

ASU researchers in fields ranging from engineering and physics to medicine and biology are developing next-generation robotic devices with far more intelligence and autonomy. If they succeed in their quest, robotics seems poised to become an essential part of nearly every human endeavor.

Srikanth Saripalli, assistant professor in the School of Earth and Space Exploration, points out that despite the impressive capabilities of unmanned vehicles like Predator aircraft or the underwater robots that have been used in the attempt to halt the flow of oil from the Deepwater Horizon rig off the Gulf Coast, these craft are still controlled by humans.

“Unmanned vehicles are not really autonomous,” Saripalli says. “There is a pilot somewhere, making them operate.”

Saripalli’s research focuses on figuring out how to cut the electronic umbilical cords that connect humans to these sorts of robots. To do so, he and fellow researchers need to solve two daunting problems: autonomous vehicles need to be able to know where they are, and they need to be able to quickly figure out what they need to do based on that information.

Resolving the first problem, known as simultaneous localization and mapping, or SLAM, is more challenging than it might seem.

SLAM can be thought of as a chicken-and-egg problem: to know its own position, a robot needs to know what’s around it, and to know what’s around it, the robot needs to know its own position.

“The biggest problem is that vision is a really rich sense, and while humans do a lot of the processing automatically, computers really don’t know how to incorporate all that data into something meaningful,” Saripalli says.

In many cases, GPS signals are available to help, but these are not available everywhere. Saripalli and colleagues are working to understand how to combine data from disparate sources, such as video cameras and inertial guidance systems, to create positional awareness.

Ultimately, a solution to these problems could lead to helicopters that land themselves on the decks of rolling ships, underwater vehicles that are let loose to map the sea floor, and robotic probes that autonomously explore planets and their moons.

Many robotics applications are more terrestrial and personal. ASU scientists Thomas Sugar and Veronica Santos are independently working on robotic solutions for prosthetic devices that replace missing body parts.

Sugar, an associate professor in the College of Technology and Innovation, has created a mechanical ankle that uses artificial intelligence to store and release energy in the joint, making walking more comfortable and efficient for those who wear the prosthetic device.

“We interpret the motion (of the ankle) using a computer algorithm to determine how to pull on the spring, which acts as our artificial Achilles tendon,” Sugar says. “The algorithm on board the artificial ankle makes that decision 1,000 times a second to allow the most efficient walking motion.”

Santos, an assistant professor in the Ira A. Fulton Schools of Engineering, has been at work creating an artificial hand possessing a sense of touch. Putting intelligent sensors in the robotic hand can make it much more able to grasp delicate objects without breaking them or move with objects without dropping them. It also more closely parallels what actually goes on in the human nervous system.

“When people think of intelligence they tend to think of the brain, but there are a lot of levels of intelligence,” Santos says. “If you are at a party and holding a bottle, and someone hits the bottle, there is an immediate reaction from your fingertips that you don’t even think about. Neuroscience studies suggest that these early responses are spinally mediated.”

Santos is also working with Stephen Helms Tillery, an assistant professor in the Ira A. Fulton Schools of Engineering, on ways to integrate signals from sensors on an artificial hand with a person’s central nervous system.

“If you could stimulate the nervous system to produce a conscious sensation of tactile feedback … you could have an artificial hand and feel what you are touching,” she says.

Stephen Pratt is another researcher who is looking to the natural world to make robots more intelligent and autonomous. An assistant professor of biology in the School of Life Sciences, Pratt is working with the U.S. Department of Defense and associates at the University of Pennsylvania and Georgia Tech to design robots and control them using factors similar to those at play in animal social networks.

Pratt’s team looks at how social animals like ants, bees or wolves solve problems and attempts to develop computer algorithms that let robots do the same.

“One thing robots don’t do well is respond to unpredictable or changing conditions,” Pratt says. “Ants are good at recruiting groups of two to 20 and working cooperatively to move large objects over rough terrain.”

Through the motions of the object they are moving, individual ants are able to tell if they have to stop pulling and start pushing or simply let go, he explains. If the ants come across a barrier, they are able as a group to work out a way to go over or around it.

“Part of what engineers would like to do is find out why this system is so robust.”

Pratt’s work could lead to the creation of teams of small robots that work together in harsh environments such as in space, undersea or on the battlefield.

The attraction of teams of robots is that they are much more resilient than single machines. With many smaller, cheaper robots, it doesn’t matter if some fail, because the others are programmed to take up the slack – much like the team of ants that adapt when one ant loses hold.

Another advantage, Pratt points out, is that a team is easy to scale up. When ants want to move a larger object, they recruit more team members. If the size of a job grows, there is no need to build a new and bigger robot; engineers can just add more little robots.

Like Pratt’s ants, researchers at ASU are acting independently but working together to move robotics forward.